Significant Difference Calculator

Test if your A/B test led to statistically significant changes

In the world of business analytics and research, understanding whether a change or difference is meaningful can be crucial for making informed decisions. This is where the concept of significant difference comes into play. Whether you're evaluating the effectiveness of a new marketing strategy or comparing customer satisfaction scores, determining if the observed differences are statistically significant can provide valuable insights.

Use-case

Imagine you want to determine whether a new teaching method significantly improves student test scores compared to the traditional method of teaching.

The hypotheses for this study are:

- Null Hypothesis, H0: There is no significant difference in the test scores of students taught using the new teaching method compared to those taught using the traditional method.

- Alternative Hypothesis, H1: There is a significant difference in the test scores of students taught using the new teaching method compared to those taught using the traditional method.

Input Values for the Calculator

- Number of Responses (Group A - Traditional Method): 100 students

- Number of Responses (Group B - New Teaching Method): 100 students

- Percentage That Passed (Group A - Traditional Method): 60% passed

- Percentage That Passed (Group B - New Teaching Method): 75% passed

By inputting these values into the significant difference calculator, you can determine if the difference in the pass rates (60% vs. 75%) is statistically significant.

The calculator indicates that the difference between the two groups is statistically significant. This means that the higher pass rate in Group B (students taught using the new teaching method) is unlikely to be explained by random variation alone, which supports the idea that the new teaching method made the difference.
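To see what a check like this involves, here is a minimal sketch of a two-proportion z-test applied to the inputs above. The exact method the calculator uses is not stated here, so treat the pooled z-test and the function name `two_proportion_z_test` as assumptions made for illustration.

```python
import math

def two_proportion_z_test(n_a, rate_a, n_b, rate_b):
    """Two-sided z-test for the difference between two pass rates (as fractions)."""
    # Pooled pass rate under the null hypothesis that both groups share one rate.
    pooled = (n_a * rate_a + n_b * rate_b) / (n_a + n_b)
    # Standard error of the difference in rates under the null hypothesis.
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value

# Group A: 100 students, 60% passed. Group B: 100 students, 75% passed.
z, p_value = two_proportion_z_test(100, 0.60, 100, 0.75)
print(f"z = {z:.2f}, p = {p_value:.3f}")
print("significant at 5%" if p_value < 0.05 else "not significant at 5%")
```

Under these assumptions the p-value comes out at roughly 0.02, which is below the usual 5% threshold and agrees with the result described above.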

The calculator handles the math, which makes deriving insights much easier. Some possible takeaways are as follows:

- Implementation Decision: Since the new teaching method significantly improves student test scores, the school may decide to adopt this method across all classes.

- Further Research: Additional studies could be conducted to identify specific elements of the new teaching method that contribute most to the improved performance.

- Policy Changes: The school administration might consider training teachers in the new method and integrating it into the curriculum.

Using a significant difference calculator helps educators and administrators make informed decisions based on data. In this case, the statistically significant difference between the two groups' test scores shows that the new teaching method effectively enhances student performance. This tool can be applied to various fields to assess the impact of different interventions and strategies.

What is a Significant Difference?

Significant difference is a statistical term for a difference between two or more groups that is unlikely to be due to random chance. When a result is statistically significant, the observed difference is likely genuine and not a fluke.

The calculation usually provides a p-value, which is the probability of observing results at least as extreme as those in the data, assuming that chance alone is at work (that is, assuming the null hypothesis is true).

Our significant difference calculator tells you directly whether or not your results are statistically significant.

What is P-Value?

A p-value is a measure of the probability that an observed difference could have occurred just by random chance. When the p-value is sufficiently small (e.g., 5% or less), then the results are not easily explained by chance alone and the null hypothesis can be rejected.

When the p-value is large, then the results in the data are explainable by chance alone, and the data is deemed consistent with (while not proving) the null hypothesis.
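One way to build intuition for "explainable by chance alone" is to simulate it. The sketch below is an illustration only, not how any particular calculator works: it assumes both groups share a single pooled pass rate, redraws both groups many times, and counts how often chance produces a gap at least as large as the observed one. The function name `simulated_p_value` and its parameters are hypothetical.

```python
import random

def simulated_p_value(n_a, passes_a, n_b, passes_b, trials=5_000, seed=0):
    """Estimate a two-sided p-value by simulating the null hypothesis."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    # Under the null hypothesis both groups share one underlying pass rate.
    pooled_rate = (passes_a + passes_b) / (n_a + n_b)
    observed_gap = abs(passes_b / n_b - passes_a / n_a)
    extreme = 0
    for _ in range(trials):
        sim_a = sum(rng.random() < pooled_rate for _ in range(n_a))
        sim_b = sum(rng.random() < pooled_rate for _ in range(n_b))
        # Small tolerance guards against floating-point rounding in the comparison.
        if abs(sim_b / n_b - sim_a / n_a) >= observed_gap - 1e-9:
            extreme += 1
    return extreme / trials

# 60 of 100 passed in Group A, 75 of 100 passed in Group B.
p_estimate = simulated_p_value(100, 60, 100, 75)
print(f"estimated p-value: {p_estimate:.3f}")
```

The estimate is the share of simulated "chance only" experiments that look at least as extreme as the real one, which is exactly what a p-value describes.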

What are null and alternative hypotheses?

The null hypothesis H0 is where you assume that the observations are statistically independent, meaning no difference in the populations you are testing. If the null hypothesis is true, it suggests that any changes witnessed in an experiment are because of random chance and not because of changes made to variables in the experiment.

The alternative hypothesis H1 is a theory that the observations are related (not independent) in some way. We only adopt the alternative hypothesis if we have rejected the null hypothesis.

Statistically Significant vs. Not Statistically Significant

- Statistically Significant: If the difference between groups passes a certain threshold (usually set at a 95% confidence level), it is considered statistically significant. This indicates a high likelihood that the difference is real and can be attributed to a specific cause rather than random variation.

It is a determination that a relationship between two or more variables is caused by something other than chance. It is also used to provide evidence concerning the plausibility of the null hypothesis, which hypothesizes that there is nothing more than random chance at work in the data.

Generally, a p-value of 5% or lower is considered statistically significant.

- Not Statistically Significant: If the difference does not meet this threshold, it is considered not statistically significant, implying that the observed variation could be due to random chance.

When the p-value is large, then the results in the data are explainable by chance alone. It is then inferred that the data is consistent with (while not proving) the null hypothesis.

How to Calculate Significant Difference

Calculating significant difference involves a few key steps and understanding some basic statistical concepts.

The Data You Need To Calculate Significant Differences

To mathematically calculate significant difference, you typically need:

- Sample data from two or more groups

- The mean (average) of each group

- The standard deviation of each group

- The sample size of each group

Fairing's significant difference calculator simplifies this. We contextualized our calculator specifically for your ease of use.

However, at the root of it, you need to get your variables right. You can do this by defining the two hypotheses that you wish to compare:

- Hypothesis #1 has a control variable that indicates the ‘usual’ situation, where there is no known relationship between the metrics being looked at. This is also known as the null hypothesis, which is expected to bring little to no variation between the control variable and the tested variable.

- Hypothesis #2 has a variant variable which you are using to see if there is a causal relationship present.

What Are the Mathematical Formulas For Significant Difference

If you were to calculate significant difference manually, one common method is the t-test. Here's a simplified version of the process:

- Calculate the means (averages) of the two groups.

- Compute the standard deviation for each group.

- Determine the sample sizes of each group.

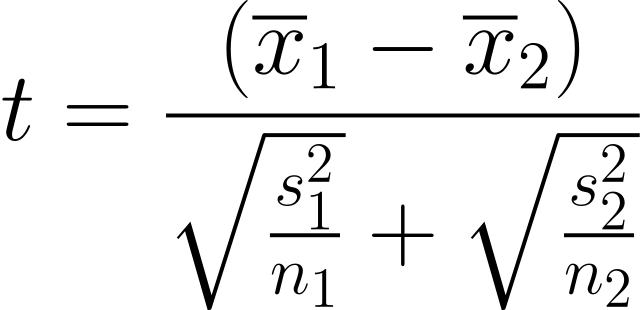

- Use the t-test formula to calculate the t-value:

Where:

- X1 and X2 are the means of the two groups

- s1 and s2 are the standard deviations of the two groups

- n1 and n2 are the sample sizes of the two groups

- Compare the t-value to a critical value from the t-distribution table based on your chosen confidence level (typically 95%).
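For reference, the t-value in the steps above is commonly written as shown below. This assumes the unpooled (Welch) two-sample form, which uses exactly the symbols defined in the list; the pictured formula may differ in detail.

```latex
t = \frac{\bar{X}_1 - \bar{X}_2}{\sqrt{\dfrac{s_1^2}{n_1} + \dfrac{s_2^2}{n_2}}}
```

The numerator is the gap between the two group means, and the denominator is the standard error of that gap, so t measures the difference in units of its own uncertainty.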

What the Results Tell You

- If the absolute value of the calculated t-value is greater than the critical value from the table, the difference between the groups is statistically significant.

- If it is less than the critical value, the difference is not statistically significant.
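The comparison can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: the t statistic uses the unpooled (Welch) form, the summary statistics are made up for the example, and the critical value is a large-sample normal approximation standing in for a t-table lookup (the table value is slightly larger for small samples). The helper names `t_value` and `is_significant` are hypothetical.

```python
import math
from statistics import NormalDist

def t_value(mean1, sd1, n1, mean2, sd2, n2):
    """Unpooled two-sample t statistic computed from summary statistics."""
    std_err = math.sqrt(sd1**2 / n1 + sd2**2 / n2)
    return (mean1 - mean2) / std_err

def is_significant(t, critical_value):
    """Two-sided decision: compare the absolute t-value with the critical value."""
    return abs(t) > critical_value

# Hypothetical summary statistics, for illustration only.
t = t_value(mean1=78.0, sd1=12.0, n1=50, mean2=72.0, sd2=10.0, n2=50)

# Large-sample stand-in for the t-table value at 95% confidence (two-sided).
critical = NormalDist().inv_cdf(0.975)

print(f"t = {t:.2f}, critical = {critical:.2f}, "
      f"significant: {is_significant(t, critical)}")
```

Taking the absolute value matters because the sign of t only reflects which group was listed first.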

Best Practices When Calculating Significant Differences

To ensure accurate and reliable results, follow these best practices:

What Confidence Level Should You Choose?

The most commonly used confidence level is 95%, which corresponds to a significance level (alpha) of 0.05. This means you accept a 5% chance of calling a difference significant when it is really due to chance alone. In some cases, a 99% confidence level (alpha = 0.01) might be used for more stringent testing.
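The link between confidence level, alpha, and the threshold a test statistic must clear can be shown with a short sketch. It uses the standard normal distribution as a large-sample approximation; the function names are hypothetical.

```python
from statistics import NormalDist

def alpha_from_confidence(confidence):
    """Significance level implied by a confidence level, e.g. 0.95 -> 0.05."""
    return 1 - confidence

def two_sided_z_critical(confidence):
    """Two-sided critical value from the standard normal distribution."""
    alpha = alpha_from_confidence(confidence)
    # Split alpha across both tails, then find the matching upper cutoff.
    return NormalDist().inv_cdf(1 - alpha / 2)

for confidence in (0.95, 0.99):
    print(f"{confidence:.0%} confidence: "
          f"alpha = {alpha_from_confidence(confidence):.2f}, "
          f"critical value = {two_sided_z_critical(confidence):.3f}")
```

A higher confidence level raises the critical value (about 1.96 at 95% versus about 2.58 at 99%), so the same data has a harder time qualifying as significant.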

Minimum Number of Responses/Sample Size

A larger sample size increases the reliability of your results. While there's no one-size-fits-all answer, a minimum of 30 responses per group is often recommended. Smaller sample sizes can lead to less reliable conclusions.
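To illustrate why sample size matters, the sketch below applies a pooled two-proportion z-test to the same 60% vs. 75% pass rates at several group sizes. The choice of test and the group sizes are assumptions made for the example.

```python
import math

def p_value_for_pass_rates(n_per_group, rate_a, rate_b):
    """Two-sided p-value for two equal-sized groups with the given pass rates."""
    # With equal group sizes the pooled rate is the simple average.
    pooled = (rate_a + rate_b) / 2
    std_err = math.sqrt(pooled * (1 - pooled) * (2 / n_per_group))
    z = (rate_b - rate_a) / std_err
    return math.erfc(abs(z) / math.sqrt(2))

# Same observed gap, different numbers of responses per group.
for n in (40, 100, 400):
    p = p_value_for_pass_rates(n, 0.60, 0.75)
    verdict = "significant" if p < 0.05 else "not significant"
    print(f"n = {n:>3} per group: p = {p:.4f} ({verdict} at 5%)")
```

With only 40 responses per group the gap does not clear the 5% threshold, while with 100 or more it does, even though the pass rates are identical in every row.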

Conclusion

Understanding and calculating significant difference is essential for making data-driven decisions in business. By using a significant difference calculator, you can easily determine whether the changes you observe in your data are meaningful or just due to random variation. Implementing best practices, such as choosing an appropriate confidence level and ensuring a sufficient sample size, will help you achieve more accurate and actionable insights.

Explore Fairing's B2B SaaS solutions for eCommerce to see how we can help your business thrive. Book a demo today, or check out our 1-minute product demo to learn more!
